- 14.1 来自Bitly的USA.gov数据

- 用纯Python代码对时区进行计数

- 用pandas对时区进行计数

14.1 来自Bitly的USA.gov数据

2011年,URL缩短服务Bitly跟美国政府网站USA.gov合作,提供了一份从生成.gov或.mil短链接的用户那里收集来的匿名数据。在2011年,除实时数据之外,还可以下载文本文件形式的每小时快照。写作此书时(2017年),这项服务已经关闭,但我们保存一份数据用于本书的案例。

以每小时快照为例,文件中各行的格式为JSON(即JavaScript Object Notation,这是一种常用的Web数据格式)。例如,如果我们只读取某个文件中的第一行,那么所看到的结果应该是下面这样:

In [5]: path = 'datasets/bitly_usagov/example.txt'In [6]: open(path).readline()Out[6]: '{ "a": "Mozilla\\/5.0 (Windows NT 6.1; WOW64) AppleWebKit\\/535.11(KHTML, like Gecko) Chrome\\/17.0.963.78 Safari\\/535.11", "c": "US", "nk": 1,"tz": "America\\/New_York", "gr": "MA", "g": "A6qOVH", "h": "wfLQtf", "l":"orofrog", "al": "en-US,en;q=0.8", "hh": "1.usa.gov", "r":"http:\\/\\/www.facebook.com\\/l\\/7AQEFzjSi\\/1.usa.gov\\/wfLQtf", "u":"http:\\/\\/www.ncbi.nlm.nih.gov\\/pubmed\\/22415991", "t": 1331923247, "hc":1331822918, "cy": "Danvers", "ll": [ 42.576698, -70.954903 ] }\n'

Python有内置或第三方模块可以将JSON字符串转换成Python字典对象。这里,我将使用json模块及其loads函数逐行加载已经下载好的数据文件:

import jsonpath = 'datasets/bitly_usagov/example.txt'records = [json.loads(line) for line in open(path)]

现在,records对象就成为一组Python字典了:

In [18]: records[0]Out[18]:{'a': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko)Chrome/17.0.963.78 Safari/535.11','al': 'en-US,en;q=0.8','c': 'US','cy': 'Danvers','g': 'A6qOVH','gr': 'MA','h': 'wfLQtf','hc': 1331822918,'hh': '1.usa.gov','l': 'orofrog','ll': [42.576698, -70.954903],'nk': 1,'r': 'http://www.facebook.com/l/7AQEFzjSi/1.usa.gov/wfLQtf','t': 1331923247,'tz': 'America/New_York','u': 'http://www.ncbi.nlm.nih.gov/pubmed/22415991'}

用纯Python代码对时区进行计数

假设我们想要知道该数据集中最常出现的是哪个时区(即tz字段),得到答案的办法有很多。首先,我们用列表推导式取出一组时区:

In [12]: time_zones = [rec['tz'] for rec in records]---------------------------------------------------------------------------KeyError Traceback (most recent call last)<ipython-input-12-db4fbd348da9> in <module>()----> 1 time_zones = [rec['tz'] for rec in records]<ipython-input-12-db4fbd348da9> in <listcomp>(.0)----> 1 time_zones = [rec['tz'] for rec in records]KeyError: 'tz'

晕!原来并不是所有记录都有时区字段。这个好办,只需在列表推导式末尾加上一个if ‘tz’in rec判断即可:

In [13]: time_zones = [rec['tz'] for rec in records if 'tz' in rec]In [14]: time_zones[:10]Out[14]:['America/New_York','America/Denver','America/New_York','America/Sao_Paulo','America/New_York','America/New_York','Europe/Warsaw','','','']

只看前10个时区,我们发现有些是未知的(即空的)。虽然可以将它们过滤掉,但现在暂时先留着。接下来,为了对时区进行计数,这里介绍两个办法:一个较难(只使用标准Python库),另一个较简单(使用pandas)。计数的办法之一是在遍历时区的过程中将计数值保存在字典中:

def get_counts(sequence):counts = {}for x in sequence:if x in counts:counts[x] += 1else:counts[x] = 1return counts

如果使用Python标准库的更高级工具,那么你可能会将代码写得更简洁一些:

from collections import defaultdictdef get_counts2(sequence):counts = defaultdict(int) # values will initialize to 0for x in sequence:counts[x] += 1return counts

我将逻辑写到函数中是为了获得更高的复用性。要用它对时区进行处理,只需将time_zones传入即可:

In [17]: counts = get_counts(time_zones)In [18]: counts['America/New_York']Out[18]: 1251In [19]: len(time_zones)Out[19]: 3440

如果想要得到前10位的时区及其计数值,我们需要用到一些有关字典的处理技巧:

def top_counts(count_dict, n=10):value_key_pairs = [(count, tz) for tz, count in count_dict.items()]value_key_pairs.sort()return value_key_pairs[-n:]

然后有:

In [21]: top_counts(counts)Out[21]:[(33, 'America/Sao_Paulo'),(35, 'Europe/Madrid'),(36, 'Pacific/Honolulu'),(37, 'Asia/Tokyo'),(74, 'Europe/London'),(191, 'America/Denver'),(382, 'America/Los_Angeles'),(400, 'America/Chicago'),(521, ''),(1251, 'America/New_York')]

如果你搜索Python的标准库,你能找到collections.Counter类,它可以使这项工作更简单:

In [22]: from collections import CounterIn [23]: counts = Counter(time_zones)In [24]: counts.most_common(10)Out[24]:[('America/New_York', 1251),('', 521),('America/Chicago', 400),('America/Los_Angeles', 382),('America/Denver', 191),('Europe/London', 74),('Asia/Tokyo', 37),('Pacific/Honolulu', 36),('Europe/Madrid', 35),('America/Sao_Paulo', 33)]

用pandas对时区进行计数

从原始记录的集合创建DateFrame,与将记录列表传递到pandas.DataFrame一样简单:

In [25]: import pandas as pdIn [26]: frame = pd.DataFrame(records)In [27]: frame.info()<class 'pandas.core.frame.DataFrame'>RangeIndex: 3560 entries, 0 to 3559Data columns (total 18 columns):_heartbeat_ 120 non-null float64a 3440 non-null objectal 3094 non-null objectc 2919 non-null objectcy 2919 non-null objectg 3440 non-null objectgr 2919 non-null objecth 3440 non-null objecthc 3440 non-null float64hh 3440 non-null objectkw 93 non-null objectl 3440 non-null objectll 2919 non-null objectnk 3440 non-null float64r 3440 non-null objectt 3440 non-null float64tz 3440 non-null objectu 3440 non-null objectdtypes: float64(4), object(14)memory usage: 500.7+ KBIn [28]: frame['tz'][:10]Out[28]:0 America/New_York1 America/Denver2 America/New_York3 America/Sao_Paulo4 America/New_York5 America/New_York6 Europe/Warsaw789Name: tz, dtype: object

这里frame的输出形式是摘要视图(summary view),主要用于较大的DataFrame对象。我们然后可以对Series使用value_counts方法:

In [29]: tz_counts = frame['tz'].value_counts()In [30]: tz_counts[:10]Out[30]:America/New_York 1251521America/Chicago 400America/Los_Angeles 382America/Denver 191Europe/London 74Asia/Tokyo 37Pacific/Honolulu 36Europe/Madrid 35America/Sao_Paulo 33Name: tz, dtype: int64

我们可以用matplotlib可视化这个数据。为此,我们先给记录中未知或缺失的时区填上一个替代值。fillna函数可以替换缺失值(NA),而未知值(空字符串)则可以通过布尔型数组索引加以替换:

In [31]: clean_tz = frame['tz'].fillna('Missing')In [32]: clean_tz[clean_tz == ''] = 'Unknown'In [33]: tz_counts = clean_tz.value_counts()In [34]: tz_counts[:10]Out[34]:America/New_York 1251Unknown 521America/Chicago 400America/Los_Angeles 382America/Denver 191Missing 120Europe/London 74Asia/Tokyo 37Pacific/Honolulu 36Europe/Madrid 35Name: tz, dtype: int64

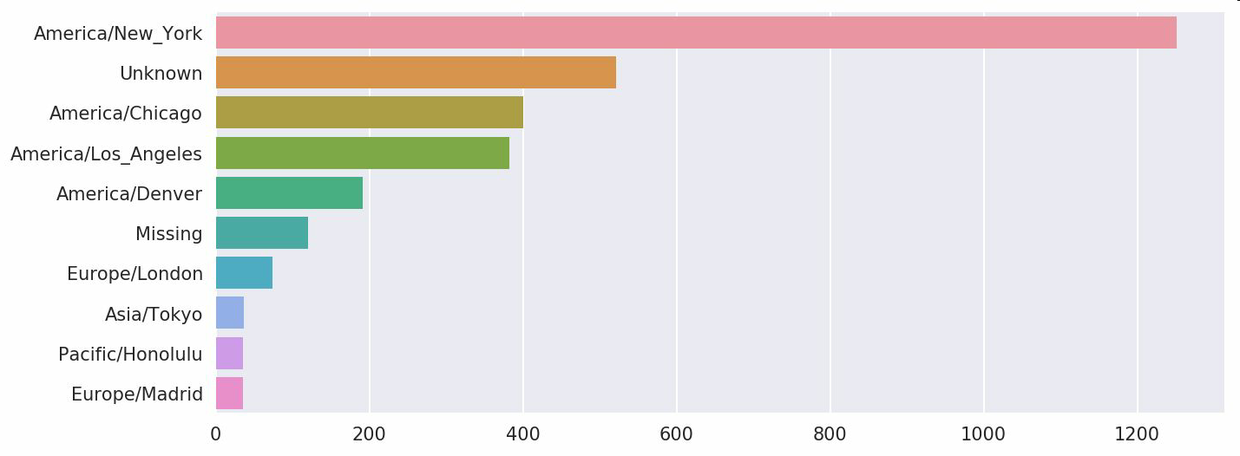

此时,我们可以用seaborn包创建水平柱状图(结果见图14-1):

In [36]: import seaborn as snsIn [37]: subset = tz_counts[:10]In [38]: sns.barplot(y=subset.index, x=subset.values)

a字段含有执行URL短缩操作的浏览器、设备、应用程序的相关信息:

In [39]: frame['a'][1]Out[39]: 'GoogleMaps/RochesterNY'In [40]: frame['a'][50]Out[40]: 'Mozilla/5.0 (Windows NT 5.1; rv:10.0.2)Gecko/20100101 Firefox/10.0.2'In [41]: frame['a'][51][:50] # long lineOut[41]: 'Mozilla/5.0 (Linux; U; Android 2.2.2; en-us; LG-P9'

将这些”agent”字符串中的所有信息都解析出来是一件挺郁闷的工作。一种策略是将这种字符串的第一节(与浏览器大致对应)分离出来并得到另外一份用户行为摘要:

In [42]: results = pd.Series([x.split()[0] for x in frame.a.dropna()])In [43]: results[:5]Out[43]:0 Mozilla/5.01 GoogleMaps/RochesterNY2 Mozilla/4.03 Mozilla/5.04 Mozilla/5.0dtype: objectIn [44]: results.value_counts()[:8]Out[44]:Mozilla/5.0 2594Mozilla/4.0 601GoogleMaps/RochesterNY 121Opera/9.80 34TEST_INTERNET_AGENT 24GoogleProducer 21Mozilla/6.0 5BlackBerry8520/5.0.0.681 4dtype: int64

现在,假设你想按Windows和非Windows用户对时区统计信息进行分解。为了简单起见,我们假定只要agent字符串中含有”Windows”就认为该用户为Windows用户。由于有的agent缺失,所以首先将它们从数据中移除:

In [45]: cframe = frame[frame.a.notnull()]

然后计算出各行是否含有Windows的值:

In [47]: cframe['os'] = np.where(cframe['a'].str.contains('Windows'),....: 'Windows', 'Not Windows')In [48]: cframe['os'][:5]Out[48]:0 Windows1 Not Windows2 Windows3 Not Windows4 WindowsName: os, dtype: object

接下来就可以根据时区和新得到的操作系统列表对数据进行分组了:

In [49]: by_tz_os = cframe.groupby(['tz', 'os'])

分组计数,类似于value_counts函数,可以用size来计算。并利用unstack对计数结果进行重塑:

In [50]: agg_counts = by_tz_os.size().unstack().fillna(0)In [51]: agg_counts[:10]Out[51]:os Not Windows Windowstz245.0 276.0Africa/Cairo 0.0 3.0Africa/Casablanca 0.0 1.0Africa/Ceuta 0.0 2.0Africa/Johannesburg 0.0 1.0Africa/Lusaka 0.0 1.0America/Anchorage 4.0 1.0America/Argentina/Buenos_Aires 1.0 0.0America/Argentina/Cordoba 0.0 1.0America/Argentina/Mendoza 0.0 1.0

最后,我们来选取最常出现的时区。为了达到这个目的,我根据agg_counts中的行数构造了一个间接索引数组:

# Use to sort in ascending orderIn [52]: indexer = agg_counts.sum(1).argsort()In [53]: indexer[:10]Out[53]:tz24Africa/Cairo 20Africa/Casablanca 21Africa/Ceuta 92Africa/Johannesburg 87Africa/Lusaka 53America/Anchorage 54America/Argentina/Buenos_Aires 57America/Argentina/Cordoba 26America/Argentina/Mendoza 55dtype: int64

然后我通过take按照这个顺序截取了最后10行最大值:

In [54]: count_subset = agg_counts.take(indexer[-10:])In [55]: count_subsetOut[55]:os Not Windows WindowstzAmerica/Sao_Paulo 13.0 20.0Europe/Madrid 16.0 19.0Pacific/Honolulu 0.0 36.0Asia/Tokyo 2.0 35.0Europe/London 43.0 31.0America/Denver 132.0 59.0America/Los_Angeles 130.0 252.0America/Chicago 115.0 285.0245.0 276.0America/New_York 339.0 912.0

pandas有一个简便方法nlargest,可以做同样的工作:

In [56]: agg_counts.sum(1).nlargest(10)Out[56]:tzAmerica/New_York 1251.0521.0America/Chicago 400.0America/Los_Angeles 382.0America/Denver 191.0Europe/London 74.0Asia/Tokyo 37.0Pacific/Honolulu 36.0Europe/Madrid 35.0America/Sao_Paulo 33.0dtype: float64

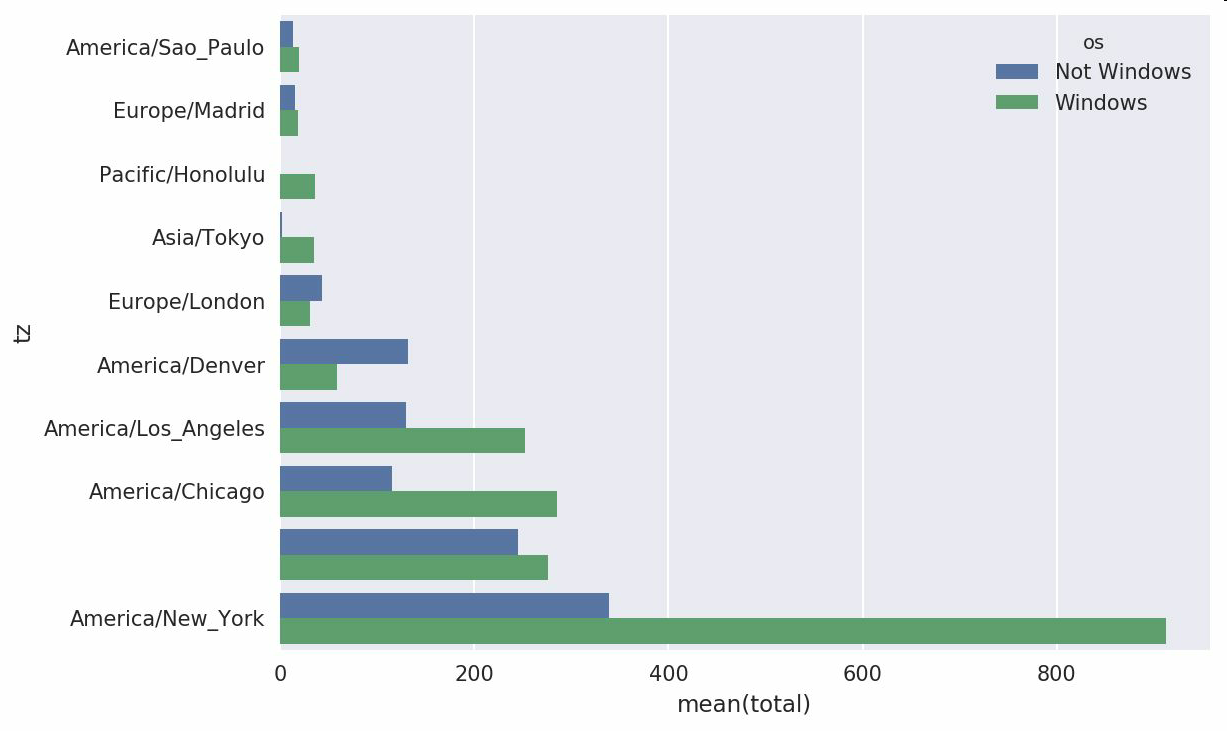

然后,如这段代码所示,可以用柱状图表示。我传递一个额外参数到seaborn的barpolt函数,来画一个堆积条形图(见图14-2):

# Rearrange the data for plottingIn [58]: count_subset = count_subset.stack()In [59]: count_subset.name = 'total'In [60]: count_subset = count_subset.reset_index()In [61]: count_subset[:10]Out[61]:tz os total0 America/Sao_Paulo Not Windows 13.01 America/Sao_Paulo Windows 20.02 Europe/Madrid Not Windows 16.03 Europe/Madrid Windows 19.04 Pacific/Honolulu Not Windows 0.05 Pacific/Honolulu Windows 36.06 Asia/Tokyo Not Windows 2.07 Asia/Tokyo Windows 35.08 Europe/London Not Windows 43.09 Europe/London Windows 31.0In [62]: sns.barplot(x='total', y='tz', hue='os', data=count_subset)

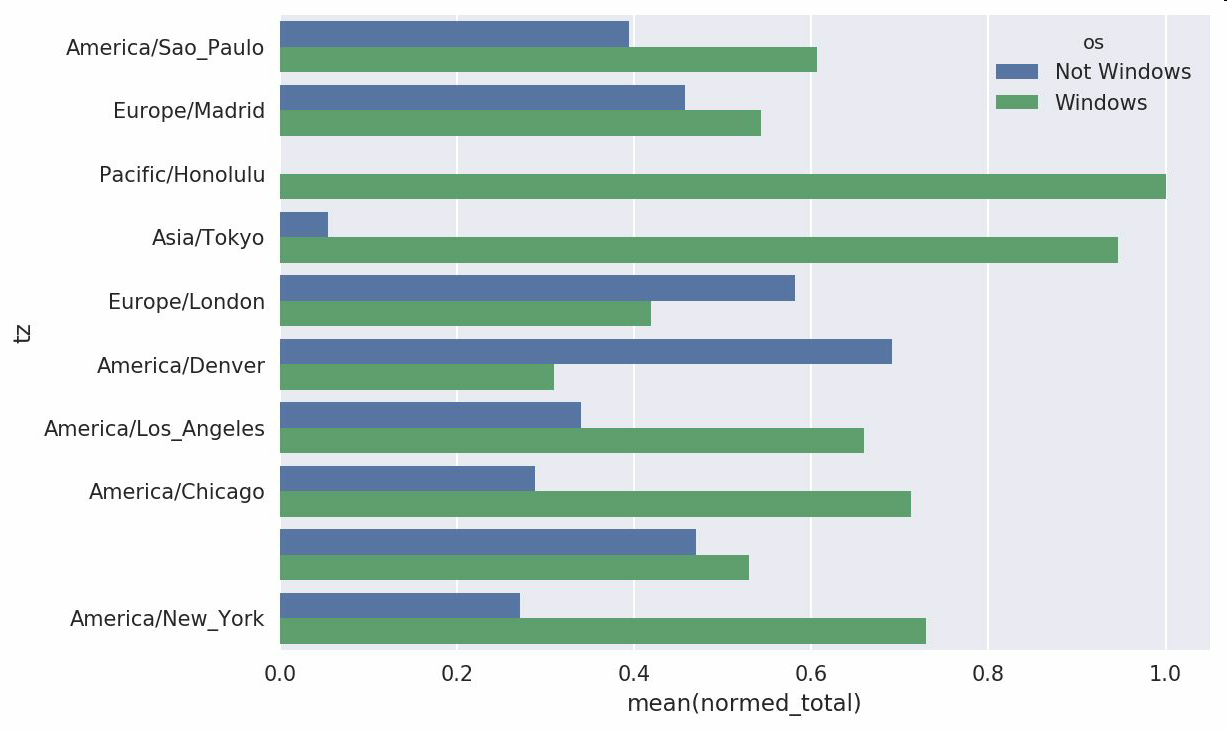

这张图不容易看出Windows用户在小分组中的相对比例,因此标准化分组百分比之和为1:

def norm_total(group):group['normed_total'] = group.total / group.total.sum()return groupresults = count_subset.groupby('tz').apply(norm_total)

再次画图,见图14-3:

In [65]: sns.barplot(x='normed_total', y='tz', hue='os', data=results)

我们还可以用groupby的transform方法,更高效的计算标准化的和:

In [66]: g = count_subset.groupby('tz')In [67]: results2 = count_subset.total / g.total.transform('sum')